Real Exam Questions and Answers as experienced in Test Center

DHORT Braindumps with 100% Guaranteed Actual Questions | https:alphernet.com.au

DHORT test - Discover Health Occupations Readiness Test (Updated: 2024)

killexams.com DHORT Brain Dumps with Real Questions


Exam Code: DHORT Discover Health Occupations Readiness Test, January 2024, by Killexams.com team

DHORT Discover Health Occupations Readiness Test

Exam Details:
- Number of Questions: The number of questions in the Discover Health Occupations Readiness Test (DHORT) can vary depending on the specific version of the exam. Typically, the test consists of multiple-choice questions, and the exact number may range from 75 to 100.
- Time: Candidates are usually given a set time limit to complete the DHORT exam, typically around 2 to 3 hours. It is important to manage time effectively so that all questions are answered within the allocated time.

Course Outline:
The DHORT is designed to assess the readiness of individuals for health occupation programs or careers. While the specific course outline may vary, the exam generally covers the following key areas:

1. Math and Science Skills:
- Basic math skills (e.g., arithmetic, fractions, decimals)
- Measurement conversions
- Understanding of scientific concepts (e.g., biology, chemistry)

2. Language and Communication Skills:
- Reading comprehension
- Vocabulary and terminology relevant to health occupations
- Writing skills and grammar

3. Critical Thinking and Problem Solving:
- Analytical and logical reasoning
- Ability to interpret and analyze information
- Problem-solving skills

4. Health Occupations Knowledge:
- Understanding of different health occupations and their roles
- Knowledge of medical terminology
- Familiarity with healthcare settings and practices

Exam Objectives:
The objectives of the DHORT are to:
- Assess the candidate's foundational knowledge and skills in math, science, language, and critical thinking relevant to health occupations.
- Determine the candidate's readiness for entry into health occupation programs or careers.
- Identify areas of strength and weakness to guide further education and preparation in health occupations.

Exam Syllabus:
The specific exam syllabus for the DHORT may vary depending on the organization or institution administering the test. However, the following topics are typically included:

1. Math Skills:
- Arithmetic operations (e.g., addition, subtraction, multiplication, division)
- Fractions, decimals, and percentages
- Measurement conversions (e.g., weight, length, volume)

2. Science Knowledge:
- Basic biology concepts (e.g., cell structure, human anatomy)
- Fundamental chemistry principles (e.g., atoms, elements, chemical reactions)
- Health-related scientific terminology

3. Language and Communication:
- Reading comprehension (understanding and interpreting passages)
- Vocabulary relevant to health occupations
- Writing skills (grammar, sentence structure, clarity)

4. Critical Thinking and Problem Solving:
- Analyzing information and drawing conclusions
- Identifying patterns and relationships
- Problem-solving scenarios related to health occupations

5. Health Occupations Knowledge:
- Overview of various health occupations (e.g., nursing, medical assisting, dental hygiene)
- Roles and responsibilities within healthcare settings
- Basic understanding of healthcare ethics and professionalism

The specific syllabus and depth of coverage may vary based on the organization or institution offering the DHORT exam. Candidates should refer to the official guidelines and materials provided by the administering organization for the most accurate and up-to-date information.

Discover Health Occupations Readiness Test: Medical Occupations test

Other Medical exams:
- CRRN: Certified Rehabilitation Registered Nurse
- CCRN: Critical Care Registered Nurse
- CEN: Certified Emergency Nurse
- CFRN: Certified Flight Registered Nurse
- CGFNS: Commission on Graduates of Foreign Nursing Schools
- CNA: Certified Nurse Assistant
- CNN: Certified Nephrology Nurse
- CNOR: Certified Nurse Operating Room
- DANB: Dental Assisting National Board
- Dietitian: Dietitian
- EMT: Emergency Medical Technician
- EPPP: Examination for Professional Practice of Psychology
- FPGEE: Foreign Pharmacy Graduate Equivalency
- NBCOT: National Board for Certification of Occupational Therapists - 2023
- NCBTMB: National Certification Board for Therapeutic Massage & Bodywork
- NET: Nurse Entrance Test
- NPTE: National Physical Therapy Examination
- OCN: Oncology Certified Nurse - 2023
- PANCE: Physician Assistant National Certifying
- VTNE: Veterinary Technician National Examination (VTNE)
- CNS: Clinical Nurse Specialist
- NBRC: The National Board for Respiratory Care
- AHM-540: AHM Medical Management
- AACN-CMC: Cardiac Medicine Subspecialty Certification
- AAMA-CMA: AAMA Certified Medical Assistant
- ABEM-EMC: ABEM Emergency Medicine Certificate
- ACNP: AG - Acute Care Nurse Practitioner
- AEMT: NREMT Advanced Emergency Medical Technician
- AHIMA-CCS: Certified Coding Specialist (CPC) (ICD-10-CM)
- ANCC-CVNC: ANCC (RN-BC) Cardiac-Vascular Nursing
- ANCC-MSN: ANCC (RN-BC) Medical-Surgical Nursing
- ANP-BC: ANCC Adult Nurse Practitioner
- APMLE: Podiatry and Medical
- BCNS-CNS: Board Certified Nutrition Specialist
- BMAT: Biomedical Admissions Test
- CCN: CNCB Certified Clinical Nutritionist
- CCP: Certificate in Child Psychology
- CDCA-ADEX: Dental Hygiene
- CDM: Certified Dietary Manager
- CGRN: ABCGN Certified Gastroenterology Registered Nurse
- CNSC: NBNSC Certified Nutrition Support Clinician
- COMLEX-USA: Osteopathic Physician
- CPM: Certified Professional Midwife
- CRNE: Canadian Registered Nurse Examination
- CVPM: Certificate of Veterinary Practice Management
- DAT: Dental Admission Test
- DHORT: Discover Health Occupations Readiness Test
- DTR: Dietetic Technician Registered
- FNS: Fitness Nutrition Specialist
- MHAP: MHA Phlebotomist
- MSNCB: MSNCB Medical-Surgical Nursing Certification
- NAPLEX: North American Pharmacist Licensure Examination
- NCCT-TSC: NCCT Technician in Surgery
- NCMA-CMA: Certified Medical Assistant
- NCPT: National Certified Phlebotomy Technician (NCPT)
- NE-BC: ANCC Nurse Executive Certification
- NNAAP-NA: NNAAP Nurse Aide
- NREMT-NRP: NREMT National Registered Paramedic
- NREMT-PTE: NREMT Paramedic Trauma Exam
- OCS: Ophthalmic Coding Specialist
- PANRE: Physician Assistant National Recertifying Exam
- PCCN: AACN Progressive Critical Care Nursing
- RDN: Registered Dietitian
- VACC: VACC Vascular Access
- WHNP: Women Health Nurse Practitioner
- AACD: American Academy of Cosmetic Dentistry
- RPFT: Registered Pulmonary Function Technologist
- ACLS: Advanced Cardiac Life Support - 2023
- GP-Doctor: General Practitioner (GP) Doctor
- GP-MCQS: Prometric MCQS for general practitioner (GP) Doctor
- INBDE: Integrated National Board Dental Examination (Day 1 exam)
- Podiatry-License-Exam-Part-III: Podiatry License exam Part III - 2023

DHORT certified experts recommend killexams.com DHORT dumps, which contain real exam questions and a VCE exam simulator for practice tests. The site claims you can memorize the questions and answers, practice them with the VCE exam simulator, and then be ready for the real DHORT test.

Medical DHORT Discover Health Occupations Readiness Test
https://killexams.com/pass4sure/exam-detail/DHORT

Question: 97
What property of a metal refers to its ability to be hammered into sheets?
A. Ductility
B. Thermal conductivity
C. Electrical conductivity
D. Malleability
E. Density
Answer: D
Malleability refers to a metal's ability to be hammered into sheets.

Question: 98
Which of the following elements is most easily oxidized?
A. Nitrogen
B. Fluorine
C. Lithium
D. Neon
E. Sulfur
Answer: C
Easily oxidized elements readily release electrons. The loss of an electron allows these elements to form a stable valence electron configuration. In the context of this question, lithium is the most willing to release an electron, so it is the most easily oxidized.

Question: 99
The hamstrings are responsible for flexing which joint?
A. Knee
B. Hip
C. Shoulder
D. Elbow
Answer: A
The hamstrings are responsible for flexing the knee joint. The hamstrings consist of the biceps femoris, semimembranosus, and semitendinosus. They also assist in knee rotation.

Question: 100
What type of bonding does a molecule of NaCl display?
A. Ionic
B. Polar covalent
C. Nonpolar covalent
D. Metallic
E. Covalent metallic
Answer: A
NaCl is composed of two ions, Na+ and Cl-. Therefore, the molecule displays ionic bonding.

Question: 101
Which of the following compounds is classified as a metallic oxide?
A. H2O
B. Na2O
C. CO2
D. NO2
E. SO2
Answer: B
Metallic oxides consist of an oxygen atom bound to a metal. The only metal present in this question is sodium, so Na2O must be a metallic oxide.

Question: 102
A saturated solution of NaCl is heated until more solute can be dissolved. How is this solution best described?
A. Dilute
B. Immiscible
C. Supersaturated
D. Unsaturated
E. Hydrophobic
Answer: C
When a saturated solution is heated so more solute can be dissolved, the solution is described as supersaturated. Supersaturated solutions are typically unstable, and the solute can crash out of solution if a seed crystal is provided.

Question: 103
A 40.0 gram sample of a radioactive element decays to 5.0 grams in 15 hours. What is the half-life of this element?
A. 3 hours
B. 2 hours
C. 6 hours
D. 7.5 hours
E. 5 hours
Answer: E
In 15 hours, this substance has decayed to 1/8 of its original mass. In other words, the substance has progressed through three half-lives (1/2 x 1/2 x 1/2 = 1/8). Thus, a single half-life for this substance is 15 / 3 = 5 hours.

Question: 104
Which of the following compounds contains a double bond?
A. C2H6
B. C2H4
C. C3H8
D. CH4
E. C4H10
Answer: B
Hydrocarbons with the formula CnH2n contain double bonds. The only compound with this formula, C2H4, must contain a double bond.

Question: 105
What is the duodenum responsible for?
A. Breaking down food in the small intestines
B. Cleans the blood
C. Creates bile
D. Absorbs oxygen
Answer: A
The duodenum is responsible for breaking down food in the small intestines.

Question: 106
80.0 grams of NaOH is dissolved in 3.0 moles of H2O. What is the mole fraction of NaOH in this solution?
A. .20
B. .37
C. .40
D. .64
E. .79
Answer: C
The mole fraction for a compound is (moles of the given compound) / (total moles in the system). In this question, there are two moles of NaOH (80.0 g at roughly 40 g/mol) and five total moles in the system. Thus, the mole fraction is 2/5, or .40.

For more exams, visit https://killexams.com/vendors-exam-list
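The arithmetic behind Questions 103 and 106 can be checked with a short sketch. This is only an illustration, not part of the exam material: the function names are made up for this example, the decay is assumed to be simple exponential decay, and the molar mass of NaOH is rounded to 40.0 g/mol, which is the rounding the answer key appears to rely on.

```python
import math


def half_life(initial_mass, remaining_mass, elapsed_hours):
    """Return the half-life in hours, assuming simple exponential decay."""
    # The number of half-lives n satisfies: remaining = initial * (1/2) ** n
    n_half_lives = math.log2(initial_mass / remaining_mass)
    return elapsed_hours / n_half_lives


def mole_fraction(solute_grams, solute_molar_mass, solvent_moles):
    """Return moles of solute divided by total moles in the solution."""
    solute_moles = solute_grams / solute_molar_mass
    return solute_moles / (solute_moles + solvent_moles)


# Assumption: NaOH molar mass rounded to 40.0 g/mol (Na 23 + O 16 + H 1).
NAOH_MOLAR_MASS = 40.0

if __name__ == "__main__":
    # Question 103: 40.0 g decays to 5.0 g in 15 hours.
    print("half-life (hours):", half_life(40.0, 5.0, 15.0))
    # Question 106: 80.0 g NaOH dissolved in 3.0 mol of water.
    print("mole fraction of NaOH:", mole_fraction(80.0, NAOH_MOLAR_MASS, 3.0))
```

Using a logarithm rather than counting halvings by hand means the same function would also work when the remaining mass is not an exact power of one half.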

Dr. Greg House has a better rate of accurately diagnosing patients than ChatGPT.

ChatGPT is still no House, MD.

While the chatty AI bot has previously underwhelmed with its attempts to diagnose challenging medical cases (with an accuracy rate of 39 percent in an analysis last year), a study out this week in JAMA Pediatrics suggests the fourth version of the large language model is especially bad with kids. It had an accuracy rate of just 17 percent when diagnosing pediatric medical cases.

The low success rate suggests human pediatricians won't be out of jobs any time soon, in case that was a concern. As the authors put it: "[T]his study underscores the invaluable role that clinical experience holds." But it also identifies the critical weaknesses that led to ChatGPT's high error rate and ways to transform it into a useful tool in clinical care. With so much interest and experimentation with AI chatbots, many pediatricians and other doctors see their integration into clinical care as inevitable.

The medical field has generally been an early adopter of AI-powered technologies, resulting in some notable failures, such as creating algorithmic racial bias, as well as successes, such as automating administrative tasks and helping to interpret chest scans and retinal images. There's also a lot in between. But AI's potential for problem-solving has raised considerable interest in developing it into a helpful tool for complex diagnostics, no eccentric, prickly, pill-popping medical genius required.

In the new study conducted by researchers at Cohen Children’s Medical Center in New York, ChatGPT-4 showed it isn't ready for pediatric diagnoses yet. Compared to general cases, pediatric ones require more consideration of the patient's age, the researchers note. And as any parent knows, diagnosing conditions in infants and small children is especially hard when they can't pinpoint or articulate all the symptoms they're experiencing.
For the study, the researchers put the chatbot up against 100 pediatric case challenges published in JAMA Pediatrics and NEJM between 2013 and 2023. These are medical cases published as challenges or quizzes. Physicians reading along are invited to try to come up with the correct diagnosis of a complex or unusual case based on the information that attending doctors had at the time. Sometimes, the publications also explain how attending doctors got to the correct diagnosis.

Missed connections

For ChatGPT's test, the researchers pasted the relevant text of the medical cases into the prompt, and then two qualified physician-researchers scored the AI-generated answers as correct, incorrect, or "did not fully capture the diagnosis." In the latter case, ChatGPT came up with a clinically related condition that was too broad or unspecific to be considered the correct diagnosis. For instance, ChatGPT diagnosed one child's case as caused by a branchial cleft cyst (a lump in the neck or below the collarbone) when the correct diagnosis was Branchio-oto-renal syndrome, a genetic condition that causes the abnormal development of tissue in the neck, and malformations in the ears and kidneys. One of the signs of the condition is the formation of branchial cleft cysts.

Overall, ChatGPT got the right answer in just 17 of the 100 cases. It was plainly wrong in 72 cases, and did not fully capture the diagnosis of the remaining 11 cases. Among the 83 wrong diagnoses, 47 (57 percent) were in the same organ system.

Among the failures, researchers noted that ChatGPT appeared to struggle with spotting known relationships between conditions that an experienced physician would hopefully pick up on. For example, it didn't make the connection between autism and scurvy (Vitamin C deficiency) in one medical case. Neuropsychiatric conditions, such as autism, can lead to restricted diets, and that in turn can lead to vitamin deficiencies.
As such, neuropsychiatric conditions are notable risk factors for the development of vitamin deficiencies in kids living in high-income countries, and clinicians should be on the lookout for them. ChatGPT, meanwhile, came up with the diagnosis of a rare autoimmune condition.

Though the chatbot struggled in this test, the researchers suggest it could improve by being specifically and selectively trained on accurate and trustworthy medical literature, not stuff on the Internet, which can include inaccurate information and misinformation. They also suggest chatbots could improve with more real-time access to medical data, allowing the models to refine their accuracy, described as "tuning."

"This presents an opportunity for researchers to investigate if specific medical data training and tuning can improve the diagnostic accuracy of LLM-based chatbots," the authors conclude.

Can generative AI potentially pass a mental health therapist or mental health counseling licensing ...

In today’s column, I will be closely looking at whether generative AI could potentially pass an official mental health counseling licensing exam. This is part of my ongoing in-depth series about generative AI or large language models (LLMs) that are or can be anticipated to be used for mental health guidance or advisement.

Before I dive into today’s particular topic, I’d like to provide a quick background for you so that you’ll have a suitable context about the arising use of generative AI for mental health advisement purposes. I’ve mentioned this in prior columns and believe the contextual establishment is essential overall. If you are already familiar with the overarching background on this topic, you are welcome to skip down below to the next section of this discussion.

The use of generative AI for mental health treatment is a burgeoning area of tremendously significant societal ramifications.
We are witnessing the adoption of generative AI for providing mental health advice on a widescale basis, yet little is known about whether this is beneficial to humankind or perhaps contrastingly destructively adverse for humanity. Some would affirmatively assert that we are democratizing mental health treatment via the impending rush of low-cost always-available AI-based mental health apps. Others sharply decry that we are subjecting ourselves to a global wanton experiment in which we are the guinea pigs.

Will these generative AI mental health apps steer people in ways that harm their mental health? Will people delude themselves into believing they are getting sound mental health advice, ergo foregoing treatment by human mental therapists, and become egregiously dependent on AI that at times has no demonstrative mental health improvement outcomes? Hard questions are aplenty and not being given their due airing.

Furthermore, be forewarned that it is shockingly all too easy nowadays to craft a generative AI mental health app, and just about anyone anywhere can do so, including while sitting at home in their pajamas and not knowing any bona fide substance about what constitutes suitable mental health therapy. Via the use of what are referred to as establishing prompts, it is easy-peasy to make a generative AI app that purportedly gives mental health advice. No coding is required, and no software development skills are needed. We sadly are faced with a free-for-all that bodes for bad tidings, mark my words.

I’ve been hammering away at this subject and hope to raise awareness about where we are and where things are going when it comes to the advent of generative AI mental health advisement uses. If you’d like to get up-to-speed on my prior coverage of generative AI across a wide swath of the mental health sphere, you might consider for example these cogent analyses:

Here’s how I will approach today’s discussion.

First, I will introduce you to a pioneering research study that sought to assess whether generative AI could potentially pass an exam taken by medical school students as part of their pursuit of achieving their medical degree. The exam is known as the United States Medical Licensing Examination (USMLE). This study received a great deal of headlines since it showcased that generative AI seems to do well on the arduous medical exams taken by budding doctors. Next, I will share with you some salient details about an exam for mental health professionals known as the National Clinical Mental Health Counseling Examination (NCMHCE).

I’m guessing you might be wondering whether generative AI might be able to do well on that type of exam. Great question, thanks. I opted to use a popular generative AI app called ChatGPT to try out a half-dozen questions from the NCMHCE. Please note that this was merely an official sample set and not by any means the full exam.

Would you be surprised to know that the generative AI was able to successfully answer many of the sampled questions? I provide some important caveats and limitations about this mini experiment of sorts, and I want to emphasize this was principally done on an ad hoc basis and merely intended to be illustrative.

Here’s the deal. Please do not jump the shark on this matter. Hold your horses. My mainstay aims here are simply to inspire others to do a deep dive on this and perform a fully comprehensive rigorous research study of a similar nature, perhaps modeled somewhat on the same approach taken by the study on the USMLE or similar such professional licensing domains.

Anyway, I believe you will find this interesting and engaging, and that it will possibly whet your appetite to find out more on these topics. My discussion is yet another angle on considering where we are and where things are going pertaining to generative AI and the field of mental health therapy.
Please buckle up and prepare yourself for quite a ride.

Generative AI And Medical School Standardized Licensing Exam

Let’s talk about tests. We generally assume that to practice medicine a test of some kind should be required to attest to the proficiency of the person that will be serving as a medical professional. I’d like to start by discussing perhaps one of the most famous such medical proficiency tests known as the United States Medical Licensing Examination (USMLE). This is the test typically expected of those attaining a medical degree in the United States.

The USMLE was devised to aid in standardizing upon one major medical examination test that would be acceptable across every state and ensure that MDs were meeting the same set of standards. The test is composed of three separate stages and is taken during medical school and also upon graduation from medical school. Here’s some additional detail as noted on the USMLE website:

Humans take the USMLE to showcase their proficiency in medicine. When you encounter a medical doctor, you are likely to assume they probably took the test and passed it. On an intuitive basis we realize that having to pass such an arduous test is impressive and helps to provide us comfort that the person knows their stuff when it comes to the medical field.

Shift gears. Can generative AI potentially also be proficient enough to pass the USMLE? That’s an interesting and some would say important question worthy of considering.

First, some quick background about generative AI. Realize that generative AI is not sentient and only consists of mathematical and computational pattern matching. The way that generative AI works is that a great deal of data is initially fed into a pattern-matching algorithm that tries to identify patterns in the words that humans use. Most of the modern-day generative AI apps were data trained by scanning data such as text essays and narratives that were found on the Internet. Doing this was a means of getting the pattern-matching to statistically figure out which words we use and when we tend to use those words. Generative AI is built upon the use of a large language model (LLM), which entails a large-scale data structure to hold the pattern-matching facets and the use of a vast amount of data to undertake the setup data training.

There are numerous generative AI apps available nowadays, including GPT-4, Bard, Gemini, Claude, ChatGPT, etc. The one that is seemingly the most popular would be ChatGPT by AI maker OpenAI. In November 2022, OpenAI’s ChatGPT was made available to the public at large and the response was astounding in terms of how people rushed to make use of the newly released AI app. There are an estimated one hundred million active weekly users at this time.

Using generative AI is relatively simple. You log into a generative AI app and enter questions or comments as prompts.
The generative AI app takes your prompting and uses the already devised pattern matching based on the original data training to try and respond to your prompts. You can interact or carry on a dialogue that appears to be nearly fluent. The nature of the prompts that you use can be a make-or-break when it comes to getting something worthwhile out of using generative AI and I’ve discussed at length the use of state-of-the-art prompt engineering techniques to best leverage generative AI, see the link here. Shortly after ChatGPT was made publicly available, many AI researchers began to test the AI app by administering various well-known standardized tests to see how the AI app would do. In February 2023, a research study was posted that indicated ChatGPT had performed surprisingly well on the USMLE. The study was entitled “Performance of ChatGPT on USMLE: Potential for AI-Assisted Medical Education Using Large Language Models” by Tiffany H. Kung, Morgan Cheatham, ChatGPT, Arielle Medenilla, Czarina Sillos, Lorie De Leon, Camille Elepaño, Maria Madriaga, Rimel Aggabao, Giezel Diaz-Candido, James Maningo, Victor Tseng, PLOS Digital Health, and posted on February 9, 2023. Here is what the research paper stated overall (excerpts):

Consider mindfully those above-noted remarks from the AI research effort. ChatGPT was able to score either at or near the passing threshold for the three-stage USMLE. Thus, an arduous medical proficiency exam that we expect human medical doctors to pass was nearly passed by a generative AI app.

Some would decry this result as misleading in the sense that the generative AI was doing this without real “knowledge” akin to what humans seem to possess. The concern is that generative AI is nothing more than a so-called stochastic parrot that mimics human wording and fails to “understand” or “comprehend” what is going on. Nonetheless, the fact that generative AI could accomplish such a feat is unto itself impressive, even if done via smoke and mirrors as some suggest.

The result is additionally surprising because the researchers used ChatGPT out of the box, as it were, namely the generic version of ChatGPT. Another approach would be to add additional data training on the medical field to ChatGPT, but that’s not what they did in this experiment. A generic data-trained generative AI was able to do well on a highly specialized medical domain exam. For more about how generic generative AI can be fine-tuned to specific domains, see my coverage at the link here.

Let’s consider a few other detailed aspects about the notable research result and then I’ll move to my next subject of discussion. The research paper noted these salient details (excerpted):

I’d like to bring your attention to a few points made in those excerpts. Notice that the experiment consisted of identifying a sample of publicly available questions associated with the exam. The idea is usually to feed in samples of questions and not necessarily an entire test per se. It is important to consider how a sample was chosen and whether the sample is suitably representative of what the full test might contain. Fair is fair.

Another fairness consideration is that there is always a chance that the generative AI might have been initially data-trained on the very same questions. If those questions were found when the startup data training took place, you could say it is absurd to feed the same questions into the generative AI. The answers will likely already be known simply due to having seen the questions and their answers beforehand.

If you select questions that arose after the cutoff date of the generative AI app’s data training, you can be somewhat comfortable that the content wasn’t encountered already. But even that is readily questioned since the questions might have appeared in other guises. Some exams modify old questions and reuse them in later versions of the exam. There is a chance that a new question is close enough to an older question that perhaps this gives the generative AI a leg up on answering the new question.

My point is that you need to carefully consider how these experiments are conducted. Overall, make sure to look at what sample was chosen and how appropriate it is. What are the odds that the generative AI has previously encountered the same or similar questions? As much as feasible, the goal is to set a fair and square playing field to see whether the generative AI can genuinely answer questions that have not previously been used as part of the data training effort.

You now have a semblance of what takes place when trying to assess generative AI about being able to pass exams such as the pervasive USMLE in the medical domain.
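The two fairness checks described above (questions published after the training cutoff, and new questions that closely resemble older ones) can be sketched in a few lines. This is a rough illustration only, not a method from any cited study: the `ExamQuestion` type, both function names, and the 0.8 similarity threshold are hypothetical, and plain string similarity is a crude stand-in for a proper contamination analysis.

```python
from dataclasses import dataclass
from datetime import date
from difflib import SequenceMatcher


@dataclass
class ExamQuestion:
    """Hypothetical record for one publicly posted sample question."""
    text: str
    first_published: date


def published_after(questions, cutoff):
    """Keep only questions first published after the training cutoff date."""
    return [q for q in questions if q.first_published > cutoff]


def resembles_any(question_text, older_texts, threshold=0.8):
    """Flag a question whose wording is close to any older question.

    The threshold is an arbitrary assumption; a reworded question can
    still slip under it, so a negative result is not proof of novelty.
    """
    return any(
        SequenceMatcher(None, question_text.lower(), old.lower()).ratio() >= threshold
        for old in older_texts
    )
```

A date filter alone is the weaker of the two checks, since a question first posted after the cutoff may be a lightly edited copy of an older one; running both gives a slightly more defensible sample.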
Let’s continue our exploration.

Generative AI And Mental Health Therapy Exam Taking

The research study that explored the use of generative AI such as ChatGPT on the USMLE can serve as a role model for similar kinds of studies. The conception is to identify publicly available sample questions, administer the questions to the generative AI, and see how well or poorly the generative AI scores on answering the questions. As much as possible, try to keep the playing field level and fair.

I decided to try this quickly for the field of mental health therapy or mental health counseling. There is a well-known exam known as the National Clinical Mental Health Counseling Examination (NCMHCE). Sample questions are publicly posted online. I selected some of the sample questions and fed them into ChatGPT. I opted to use ChatGPT due to its immense popularity and because it has generally been the default choice of similar research studies.

I might note that a more advanced generative AI such as GPT-4 by OpenAI or others would likely do a better job than ChatGPT. In that manner, you could interpret the ChatGPT usage as the floor and expect that we might see heightened results by using a more advanced generative AI app. There isn’t an ironclad guarantee that a more advanced generative AI will do better. The odds though are in that direction.

We also have to be watchful about, in a sense, polluting an experiment by using questions that have already been seen by the generative AI during the initial data training. Furthermore, if the generative AI is hooked up to the Internet, the AI might simply go out and find the questions and their answers, similar to a search engine, rather than trying to directly answer the questions. ChatGPT in that sense is a handy choice because the free version does not readily allow for Internet access to perform its activities and the data training was last cut off in January 2022 (at the time of writing of this discussion).
Let’s dive into the ad hoc experiment by first establishing the nature of the mental health therapy or mental health counseling exam. The National Clinical Mental Health Counseling Examination (NCMHCE) is devised and administered via an organization known as the National Board for Certified Counselors, Inc. Here is what the website for the organization says (excerpts):

The gist is that this is a well-known and widely accepted organization, and the exam is likewise well-known and widely accepted. I bring this up in case you read a study that used generative AI on some relatively unknown exam or an exam with a less-than-stellar reputation, in which case you would want to gauge the result of the study partially on the rigor and standing of the test being given at the get-go. Here is what the website about the NCMHCE says about the exam (excerpts):

Observe some key points mentioned in those excerpts.

First, the exam is used to assess entry-level clinical mental health counselors. You might say that this is handy for my ad hoc experiment since I want to focus on the keystone threshold needed to be considered suitably knowledgeable for proceeding to perform mental health therapy with real clients or patients. Other exams might be used to assess more advanced skill levels, but I’m aiming here to start with the usual starting point. I’m sure that other researchers are trying or will try to do the same for more advanced instances.

Second, note that candidates who want to sit for the exam must have a graduate-level degree or higher from an accredited counseling program or as administered by an accredited college or university. This sets the bar higher than perhaps allowing an undergraduate to take the exam or maybe wantonly opening the exam to anyone who wants to take it. We can presume that the test is likely to ask questions of a hard nature. That’s good since we would want to make sure we supply something challenging to generative AI rather than some easy-peasy questions or materials. We might also note that of course, generative AI would not qualify to officially take the exam since it has not met all the criteria to do so.

The official exam website provides an NCMHCE Sample Case Study that indicates the case study is considered updated as of March 2023. I selected six sample questions from this sample set. I want to loudly emphasize that this is an ad hoc selection and I do so merely to be illustrative of what might be done on a more rigorous basis.

Though the date says March 2023, there of course is a chance that these questions and their answers have been around before that date, in which case ChatGPT might have seen them before the January 2022 cutoff date. I tried to do various probing into ChatGPT to see if the content had already been previously encountered.
By and large, it doesn’t seem to be, but that’s not known for sure, and a deeper analysis would need to be undertaken to ascertain this. For the moment, let’s go with the flow and assume that the trial questions weren’t previously seen by ChatGPT during its data training. The six sampled trial questions cover these six respective topics:

Keep that in mind as I walk you through what ChatGPT provided as answers to the posed questions. The test is essentially based on case studies. For these six sampled trial questions, a case study was provided in the publicly posted material. The case study was fed into ChatGPT for this analysis. Rather than displaying for you the entirety of the case study, I will do a quick recap to bring you up to speed. In this instance, the case study entails a divorced female of age 35 who is first undertaking a mental health counseling session with a mental health therapist who has some background about the client or patient but otherwise, this is the first meeting of the two. The client or patient has already been provisionally diagnosed as having a major depressive disorder. Additional background is given about the client or patient. For example, after her divorce, she began staying in bed quite a lot and moved back in with her mother. She got fired from her job. She has had financial difficulties. Most days, she feels sad, empty, and anxious. She has joint legal custody with her ex-husband of their two children, respectively aged 10 and 12. And so on. That outlines the nature of the underlying case study. Questions And The Answers Generated By The Generative AI I am going to walk you through each of the six multiple-choice questions and also showcase the answers that were generated by ChatGPT so that you can follow along step-by-step. My initiating prompt asks ChatGPT to provide answers plus explain why each chosen answer was considered the correct answer by ChatGPT. Asking for an explanation is not necessary, but I thought getting explanations might be interesting to see. There is also a bit of prompting strategy involved, namely that by asking for an explanation the chances are that a generative AI app might be more extensive in trying to solve a given question or problem, see my discussion at the link here. 
You could suggest that I was aiding the generative AI by giving an establishing prompt that would urge it to do a better job than otherwise. Whenever you look at research studies doing just about anything with generative AI, make sure to find out what prompts they used. This is a significant factor related to the performance of the generative AI. Studies that fail to showcase their prompts are unfortunately doing a bit of a disservice by not revealing how they got the generative AI to undertake things. The sampled trial questions are based on the case study, and I’ve placed them in quotes to indicate they came from the case study. In some instances, the wording is slightly reworded merely and strictly for purposes of feeding them into ChatGPT. I am purposely not going to speculate or comment on the answers that are given by ChatGPT. I will simply note whether ChatGPT selected the correct multiple-choice selection as stipulated in the trial set. I’m guessing that mental health therapists and mental health counselors will undoubtedly find the explanations of special interest and will indubitably get them extensively mulling over what ChatGPT had to say. Here is my establishing prompt and the answer provided by ChatGPT:

Okay, we are good to go. Here’s the first question: “Q1. What would be the best way for a mental therapist to at initial intake seek to establish a therapeutic relationship with this person?” “A. Reflect on what is irrational about the woman’s self-evaluations.” “B. Advise her about how to manage her painful experiences.” “C. Summarize her emotions and her desire for change.” “D. Explore how she could improve her role in relationships.”

“Answer to Q1 is C. Summarize her emotions and her desire for change.” “Explanation: Establishing a therapeutic relationship involves demonstrating empathy, understanding, and acknowledging the client's emotions. Summarizing her emotions and desire for change (Option C) helps validate her experiences and shows that the therapist is actively listening and comprehending her situation. This approach helps build rapport and sets the foundation for a collaborative therapeutic relationship. It also allows the client to feel heard and understood, creating a safe space for further exploration and intervention.” End of ChatGPT response ChatGPT selected answer C, which is considered the correct answer as per the trial set. The explanation indicates the presumed basis. I could have also asked ChatGPT to explain why the other answers weren’t as good as the selected answer. That would have gotten quite wordy and didn’t seem needed for this ad hoc analysis. A deeper study might want to consider getting a lengthier reply with a more elaborate explanation. We move to the second question: “Q2. What information should a mental health therapist explore to help the client remember her strengths?” “A. The client’s disclosure about her previous level of functioning.” “B. The client’s recollections of past mistakes.” “C. The client’s feelings about her mother paying for counseling.” “D. The client’s use of cognitive distortions.”

“Answer to Q2 is A. The client’s disclosure about her previous level of functioning.” “Exploring the client's disclosure about her previous level of functioning (Option A) would be most relevant to identify and highlight her strengths. Understanding her past achievements, abilities, and positive aspects of her life before the onset of depressive symptoms can help the therapist and the client focus on resources that can be tapped into for recovery. This approach emphasizes a strengths-based perspective and encourages the client to recognize her capabilities, fostering a more positive outlook on her current situation.” End of ChatGPT response The answer indicated by ChatGPT is answer A, which is also the correct answer per the trial set. We are now two-for-two in terms of having gotten the questions right. Next, let’s see what happens with the third question: “Q3. How should a mental health therapist handle the client’s concern about confidentiality?” “A. Explain that as a mental therapist, they will share progress updates with the mother but not provide any details.” “B. Clarify what can and cannot be shared through informed consent.” “C. Request the mother’s assent to the treatment and obtain the client’s consent.” “D. Ask the client for a signed release that would include her mother as part of the treatment process.”

“Answer to Q3 is B. Clarify what can and cannot be shared through informed consent.” “The best approach is to clarify confidentiality issues through informed consent (Option B). The therapist should explain the limits of confidentiality, outlining what information can be shared and under what circumstances. This empowers the client to make an informed decision about what aspects of her treatment will remain confidential and what may need to be shared. It respects the client's autonomy and privacy while addressing concerns about the involvement of her mother in the therapy process.” End of ChatGPT response The answer indicated by ChatGPT is B, which is also the correct answer via the trial set. Three for three, so far. Here’s the fourth question: “Q4. From the perspective of the mental health therapist, what self-report from the client is a discernable criterion for the given diagnosis?” “A. Her disagreements with her mother.” “B. Her increased psychomotor agitation.” “C. Her difficulty managing her children.” “D. Her decreased pleasure in her activities.”

“Answer to Q4 is D. Her decreased pleasure in her activities.” “A discernible criterion for a major depressive disorder is often a pervasive and persistent decrease in pleasure or interest in previously enjoyed activities. This is commonly referred to as anhedonia. The client's report of not being able to remember the last time she enjoyed something and the cessation of an activity she used to find enjoyable (creating jewelry) aligns with this criterion. While other aspects, such as disagreements with her mother (Option A) or difficulty managing her children (Option C), may be related to her emotional state, the decreased pleasure in activities is a more specific indicator for depression.” End of ChatGPT response The answer indicated by ChatGPT is D, which is the correct answer according to the trial set. You will also note that this time the explanation opted to explain why the chosen answer was considered a better choice than some of the other proposed answers. We are at four of four in terms of getting the correct answers. Let’s see what occurs with the fifth question: “Q5. What criteria were likely used to determine the severity specified for the client’s major depressive disorder?” “A. The number of symptoms is not substantially in excess of those needed to make the diagnosis, yet functional impairment exists.” “B. The intensity of the symptoms is distressing but manageable, and results in minor impairment of social or occupational functioning.” “C. The intensity of symptoms is high and is accompanied by psychomotor agitation and mixed features associated with a major depressive episode.” “D. The number of symptoms and their intensity are distressing and unmanageable and interfere with the client’s social and occupational functioning.”

“Answer to Q5 is D. The number of symptoms and their intensity are distressing and unmanageable and interfere with the client’s social and occupational functioning.” “The severity specified for major depressive disorder is often determined by the number and intensity of symptoms, as well as their impact on the client's social and occupational functioning. In this case, the client reports feeling sad, empty, and anxious most days, experiences tension every day, has difficulty getting out of bed, and has ceased engaging in previously enjoyed activities. Additionally, she has lost her job, faces financial difficulties, and expresses guilt about her interactions with her children. These factors suggest a significant impact on both social and occupational functioning, aligning with the criteria for a more severe form of major depressive disorder.” End of ChatGPT response ChatGPT indicates the answer should be D, but the trial set indicates the correct answer is A. This is the first miss by ChatGPT in this set of sampled trial questions. I was curious whether ChatGPT could justify the answer D versus the answer A. One thing to keep in mind is that some generative AI apps such as ChatGPT tend to immediately back down when you challenge them about an answer. The AI will indicate that it was wrong and switch to the answer that you say is the correct answer. In a sense, this is somewhat disconcerting because you would generally prefer the AI to moderately push back and support an answer that was chosen. I am not saying this should be doggedly dogmatic. It certainly makes sense to have the AI back down if the user is telling the AI that the answer is wrong, though I think you can see how this might be a dangerous slope to slide upon. Just because a user says that something is wrong does not mean that it indeed is wrong. You can end up with the AI being too easily convinced of falsehoods simply because the user said they were truths. 
This is an ongoing issue of concern about generative AI, see my analysis at the link here. All in all, rather than telling the AI that it was wrong, which would possibly get it to collapse like a deck of cards, I came to the matter politely and tangentially. I merely asked ChatGPT to compare the answer D with the answer A. I didn’t tip my hand that the answer A is considered the correct answer. Here they go:

“Let's compare options D and A:” “D. The number of symptoms and their intensity are distressing and unmanageable and interfere with the client’s social and occupational functioning.” “A. The number of symptoms is not substantially in excess of those needed to make the diagnosis, yet functional impairment exists.” “The main distinction lies in the severity of functional impairment. Option D suggests that the number of symptoms and their intensity are distressing and unmanageable, leading to significant interference with social and occupational functioning. This aligns with a more severe form of major depressive disorder, where the impairment is substantial.” “On the other hand, Option A acknowledges functional impairment but does not emphasize the distressing and unmanageable nature of the symptoms. It leaves room for interpretation that the impairment might be present but not to the extent that it is distressing or unmanageable.” “In the context of major depressive disorder, severity is often determined not just by the presence of symptoms but also by the impact on daily functioning. Option D explicitly addresses the distress and interference with functioning, making it a more comprehensive and accurate description of a severe presentation of major depressive disorder.” End of ChatGPT response I’d bet that mental health professionals could readily debate the indication of ChatGPT about why one of the answers is construed as better than the other. Please do so at your leisure. Moving to the sixth and final question, take a look at this one: “Q6. From the perspective of the mental health therapist, what short-term goal would guide the treatment of the client’s presenting problem?” “A. Spend one hour each day rewriting difficult childhood memories with her mother.” “B. Return to her previous sleep schedule to be more present with her children.” “C. Spend one hour each day journaling about her vision for her next marriage.” “D. Return to full-time work in nursing to boost her self-image and mood.”

“Answer is B. Return to her previous sleep schedule to be more present with her children.” “The most appropriate short-term goal would be to address the immediate issue affecting her daily functioning, which is her disrupted sleep schedule. Returning to her previous sleep schedule (Option B) can have a positive impact on her mood, energy levels, and ability to engage with her children. This goal is specific, achievable, and directly addresses a key factor contributing to her distress. It also aligns with improving her overall well-being and functioning in the short term.” End of ChatGPT response The answer selected by ChatGPT is answer B, which is the correct answer according to the trial set.

Conclusion

Please do not try to calculate the percentage of times that ChatGPT was able to correctly answer the sampled questions. Doing so would be ridiculous as this was not a rigorous experiment but instead an ad hoc one and meant solely to get the juices flowing about examining how generative AI might do on these kinds of tests. That being said, I believe there is enough indication that performing a thorough study would be worthwhile. Had the generative AI gotten none of the answers correct, I would probably be leaning toward suggesting that a full-on study wasn’t likely worth the effort. This snippet suggests that doing more might be valuable. There are flies in the ointment on these matters. One argument about having generative AI take these various types of tests is that there isn’t any human soul or human connection that is also being assessed, see my discussion at the link here. In other words, just because a generative AI app can answer questions on a dry test does not mean that we would want the generative AI to interact with real people in real life and provide advice as to whatever domain or realm of expertise is being tested. The argument is further amplified when considering the subject of mental health. 
Some would assert that only another human can adequately counsel another human. An AI system is not human and does not have human experience under its belt. A counterviewpoint is that notwithstanding humanness, there is still a place for AI to aid humans, including in the sphere of mental health guidance or advice. Let’s conclude this discussion for now by invoking a famous line. The renowned American psychologist Carl Rogers purportedly said this: “In my early professional years, I was asking the question, how can I treat, or cure, or change this person? Now I would phrase the question in this way, how can I provide a relationship that this person may use for their personal growth?” Can generative AI form a relationship with humans and if so, do they want that to be how mental health is conveyed or advised? More questions ostensibly need more answers; thus, the endeavor must continue. Agrawal is a pediatrician and gun safety advocate. One morning last summer, when I was seeing patients in a Bronx clinic, a nurse told me to lock myself in an exam room. A patient's father was looking for me, angered about my report to child protective services. Even though he eventually left, my chest cramped when I learned that he planned to return. Just weeks before, a doctor in another state had been shot dead by a patient; and very recently, there had been three shootings near the clinic where I was working. I relayed my safety concerns to a clinic administrator and was handed a small silver plastic whistle. Not long after, I decided to resign. A year ago, Surgeon General Vivek Murthy, MD, MBA, sounded the alarm on workplace violence in healthcare as a contributor to skyrocketing health worker burnout and resignation. Despite his national advisory calling for zero-tolerance violence policies, the issue of gun violence in healthcare remains underreported and unchecked, particularly in clinics and other non-hospital settings. 
From 2010 to 2020, the Joint Commission, the largest standards-setting and accrediting body in healthcare, received 39 reports of hospital shootings. Most were staff shot by patients. While the commission issued updated healthcare workplace violence prevention standards in 2022, they are mostly directed towards hospitals, leaving health workers in many outpatient facilities unprotected. One healthcare facility shooting took place per week in July 2023, with two occurring at outpatient clinics. Three were intimate partner violence related. On July 11, 2023, a disturbing notification popped up on my cell phone, "Patient shoots, kills orthopedic surgeon in clinic." Benjamin Mauck, MD, a 43-year-old specialist in childhood hand deformities and father of two young children, had been shot dead in a Tennessee clinic exam room, allegedly by patient Larry Pickens. Law enforcement characterized the shooting as an isolated "one-on-one interaction"; the public was advised to say something if they see something suspicious in the future. Yet, less than 1 week before and just 7 miles away, an affiliate orthopedic clinic had alerted police about concerning behavior by Pickens, as did his stepfather for violent behavior in 2016. But none of these red flags helped protect Mauck from getting shot. Less than 2 weeks after Mauck's death, 44-year-old hospital security guard Bobby Smallwood was shot to death on an Oregon hospital maternity unit. Then, 3 days later, a physician was found with a gunshot wound on the grounds of a medical building in Cedar Hill, Texas. The shooter's girlfriend worked in the same building and may have been the intended target. The Department of Homeland Security (DHS) describes healthcare shootings as "unpredictable," but research indicates that there are some patterns. For example, a 2019 study of 88 acute care hospital shootings found that most occurred in the summer; winter was the second most violent season. 
A study of physician involved shootings found most were related to dissatisfaction with health outcomes. According to StatPearl, outpatient clinics are the second most dangerous site for healthcare shootings, next to the emergency department. While there have been efforts to make healthcare safer, they often aren't backed by science and may worsen health inequities. A recent JAMA article evaluated armed officers in hospitals and found that 17 patients were shot by hospital security from 2009 to 2022. Of those patients shot, most were Black and/or exhibited signs of mental instability. Some hospitals now flag patients' charts to alert staff to potentially violent behavior. Not surprisingly, a study published in JAMA found that Black patients were flagged more than white patients using this system, and suffered longer wait times, which can increase risk for healthcare violence, according to the Occupational Safety and Health Administration. Recently, the International Association for Healthcare Security and Safety proposed new guidelines for weapons screening, including metal detectors and "amnesty boxes" for voluntary firearm storage at hospital entrances. While a study found that metal detectors were effective in confiscating weapons, whether they reduced healthcare violence is unknown. This year, the Senate proposed the bipartisan Safety from Violence for Healthcare Employees Act, making assaulting healthcare workers a federal crime. Similar legislation has been proposed by the House and endorsed by the American Hospital Association. However, in states that have increased penalties, there is no evidence to support effectiveness. So, what next? Are any interventions effective? Extreme Risk Protection Orders, also known as "red flag" laws, offer a promising, evidence-informed tool for suicide, mass shooting, and homicide prevention. 
Through a non-criminal legal process, a patient at risk of harm to themselves or others can be temporarily prevented from possessing and purchasing firearms. While available in 21 states and the District of Columbia, and to health professionals in six states, red flag laws are underutilized. The Bipartisan Safer Communities Act offers federal funding to support state implementation. What can we, as healthcare professionals, do? First, we must speak up, for our profession and our patients. We can promote understanding and ways to reduce healthcare rage, before criminalizing patients. We can ensure healthcare organizations invest in gun violence prevention strategies that are evidence-based, and that prioritize our safety over industry profits. We can't afford to wait. Nina Agrawal, MD, is a pediatrician in New York City. She leads gun safety advocacy for the American Medical Women's Association and New York State-American Academy of Pediatrics.

A Robot Medical Professional

Experts generally agree that, before we might consider artificial intelligence (AI) to be truly intelligent (that is, on a level on par with human cognition), AI agents have to pass a number of tests. And while this is still a work in progress, AIs have been busy passing other kinds of tests. Xiaoyi, an AI-powered robot in China, for example, has recently taken the national medical licensing examination and passed, making it the first robot to have done so. Not only did the robot pass the exam, it actually got a score of 456 points, which is 96 points above the required mark. This robot, developed by leading Chinese AI company iFlytek Co., Ltd., has been designed to capture and analyze patient information. Now, they've proven that Xiaoyi could also have enough medical know-how to be a licensed practitioner. Local newspaper China Daily notes that this is all part of the country's push for more AI integration in a number of industries, including healthcare and consumer electronics. 
China is already a leading contender on the global AI stage, surpassing the United States in AI research, in an ultimate effort to become a frontrunner in AI development by 2030. The country's determination, driven by the realization that AI is the new battleground for international development, could put the U.S. behind China in this worldwide AI race.

AI, Robots, and the Future of Healthcare

With both governments and private companies intent on putting AI to good use, one of the first fields in which AI technologies are being applied has been medical research and healthcare. Most are familiar with IBM's Watson, which has made significant headway in AI-assisted cancer diagnosis and in improving patient care in hospitals. Then there's Amazon with the Echo and AI-powered virtual assistant Alexa, which has been present in the healthcare field for a while now. Similarly, Google's DeepMind Health is working on using machine learning to supplement healthcare processes in the United Kingdom. In the same manner, iFlytek plans to have Xiaoyi assist human doctors in order to improve their efficiency in future treatments. "We will officially launch the robot in March 2018. It is not meant to replace doctors. Instead, it is to promote better people-machine cooperation so as to boost efficiency," iFlytek chairman Liu Qingfeng told China Daily. Concretely, iFlytek's vision is to use AI to improve cancer treatment and help to train general practitioners, which China is sorely in need of. "General practitioners are in severe shortage in China's rural areas. We hope AI can help more people access quality medical resource," Qingfeng added. In short, there's no need to fear an AI takeover in the medical field, even though many worry that such advances will eliminate human jobs. In this case, it is quite the opposite, because this AI will work to augment the capabilities of its human counterparts instead of replacing them. 
So, at least for now, you don't have to worry about being referred to a robot doctor.

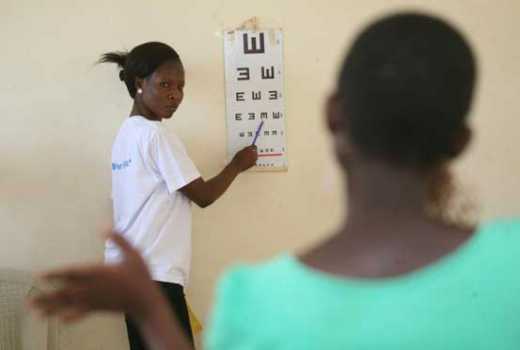

NAIROBI, KENYA: All new drivers will now be required to take a medical exam before they are issued with licences. They will also have to be tested again after some time. The medical tests will determine the quality of drivers' eyesight before they can be allowed on the road. These are among a series of stringent rules and regulations contained in the National Transport and Safety Authority's (NTSA) new curriculum for individuals seeking to be trained as drivers. The medical test will be repeated for licence holders every 10th year of holding the licence and the drivers will repeat the training, sit for an exam and obtain a competence certificate before being issued with a new licence. At the same time, before being issued with a provisional licence for admittance in a driving school, all motorists - motorcycles, tricycles, public service vehicles, heavy vehicles, trucks, and light-weight vehicles - should submit a medical report on their health. The report should not be more than six months old. Those who are aged 60 years and above must submit a medical report annually to facilitate renewal of their licences, a provision that the National Disaster Management Unit (NDMU) has heavily criticised. Main causes "Age is not among the main causes of road traffic accidents. There are many drivers who are over 50 years with clean records and only a few, maybe, with bad ones," said NDMU Deputy Director Pius Masai. Mr Masai added that the transport authority should address the main causes of accidents, which have been linked to human error, dangerous and reckless driving, and speeding. In the curriculum released on January 3, NTSA seems to be out to ensure that no aspect of the transport sector is left unattended. Those who choose to be amateur drivers before they are absorbed into driving schools will not be admitted if it is found that they have been involved in an accident in the previous 24 months. 
According to NTSA Director General Francis Meja, the document will "set standards among drivers and instill professionalism in the industry". The curriculum has roped in not just learners, but instructors and driving schools with strict conditions that should be met before one is issued with a driving licence. Under the curriculum, a learner shall only be deemed to have fulfilled the training requirements if they have attended at least 75 per cent of the classes. Schools shall keep an updated attendance register. However, in practical training, one must attend 100 per cent of the classes to be deemed to have completed the course. “Theory training may be provided online, provided an online attendance and assessment register is maintained,” reads the document in part. Only learners who have attained a minimum aggregate score of 70 per cent in the school's final test shall be presented for examination. If you fail, you must go back to class where re-testing should be done within 21 days. “That a learner who fails in theory examination shall be required to re-take the entire examination while one who fails a practical exam shall be required to re-sit the practical within a period not exceeding six months,” the new curriculum directs. “A candidate who fails to take the re-test shall be required to register afresh and re-do the course.” Training for a moped or any mini-motorcycle below the engine capacity of 50cc, with no passengers, requires one to be at least 16 years old. Anything above 50cc to 400cc with load capacity not exceeding 60kg means one must be 18. For the higher type of motorcycle categorised as A3, often used by couriers and including three-wheelers, the minimum age is 21 and one needs at least one year's experience. For the first time, truck drivers will be trained through a mainstream process. At the moment, there are no specific schools that teach truckers; they mostly learn through apprenticeship. 
To drive a 14-seater matatu, one must be at least 22 years old. If you want to upgrade, then you be tested in both theory and practicals, and pass. (MENAFN- EIN Presswire) Dr. Vijay Naik, Owner of Survivors exam Preps, Reviews the Best Ways to Study for the USMLE Exam BOSTON, MA, UNITED STATES, January 4, 2024 /EINPresswire / -- Dr. Vijay Naik, founder of Survivors exam Prep, is a medical professional who has made it his mission to prepare and support aspiring doctors and physicians. With a deep understanding of the rigorous journey that lies ahead for these students, Dr. Naik founded Survivors exam Prep to offer personalized guidance and mentorship. His program has been developed based on reviews and feedback from current and previous students, ensuring that the curriculum is tailored to their needs. Dr. Naik's dedication to helping students successfully pass their USMLE exam is backed by his own experiences and the challenges he faced on his path to becoming a doctor. With Survivors exam Prep, students can feel confident that they will have the support and resources they need to thrive in their medical careers. Dr. Vijay Naik has reviewed his previous students' success and compiled three essential tips to help them pass the exam with flying colors. Understand the fundamental reasoning behind all medical information. Dr. Naik advises that by comprehending where terms come from and their function that serves a bigger purpose, students can apply the knowledge in a more effective and memorable way. Dr. Vijay Naik's tips may make the difference between success and failure for USMLE exam takers. In a recent review a previous student said, “Dr. Naik does a great job at explaining and teaching the toughest and biggest concepts in medicine. He drills you throughout the course to ensure you retain these high-yield concepts. 
He is a wizard when it comes to breaking down questions, and a large part of why this course is so special is the test-taking skills and principles of management that he drills into you during the course. The combination of understanding the big concepts in GI, NEURO, CARDIO, RESP, and OBGYN to name a few, coupled with the test-taking skills is what helped to boost my exam score by over 20 points.” Developing a deep and thorough understanding of medical terminology is essential for anyone pursuing a career in the healthcare industry. Fortunately, Dr. Vijay Naik offers some valuable tips for students, including the importance of consistent quizzing and testing. It's not enough to simply memorize medical terms; students must also understand how to apply them in real-life situations. By taking the time to quiz themselves and ask questions, they can develop a deep knowledge of the subject matter. Additionally, Dr. Naik also teaches test-taking skills that can help reduce stress and ensure proper time management during rigorous exams like the USMLE. With these tips in mind, students can feel confident and well-prepared as they move forward in their healthcare careers. Dr. Naik is a committed and dedicated medical professional who has made it his mission to support aspiring students in their journey to becoming successful medical practitioners. With access to a wealth of high-quality resources and up-to-date information, Dr. Naik utilizes a personalized approach to ensure that students have the tools and skills they need to succeed. His Survivors exam Prep program focuses on the unique needs of each candidate, providing essential practice materials and personalized study plans. What's more, Dr. Naik's commitment to staying up-to-date with the latest medical industry trends through updated lessons and a supportive community makes his program a trustworthy choice for anyone preparing to take the USMLE. 
His dedication, perseverance, and unerring belief in his students' potential make him an exemplary leader and mentor to aspiring medical professionals.

Jon Smith

Top 5 No-Exam Life Insurance Companies

These are our picks for the top five life insurance companies that offer no-exam coverage:

What Is No-Exam Life Insurance?

A no-exam life insurance policy does not require applicants to complete a medical exam to be considered for approval. Rather, it follows an accelerated underwriting process to approve applicants quickly. Common no-exam options include term life, whole life and final expense.

There are two types of no-exam life insurance: simplified-issue and guaranteed-issue. Simplified-issue life insurance policies do not require a medical exam but still ask questions about your health and base approval on your answers. By contrast, guaranteed-issue life insurance policies require no exam and ask no health questions, meaning eligible applicants are guaranteed coverage. Of the two, guaranteed-issue life policies are usually more expensive.

If you are worried about the effect that chronic illness or other health issues might have on your policy premiums, or if you have been denied regular coverage in the past, no-exam coverage might be your best option. However, it is costlier than a regular policy due to the increased risk the insurer takes on by not knowing your medical history.

Pros of No-Exam Life Insurance

Cons of No-Exam Life Insurance

How Much Does a No-Exam Life Insurance Policy Cost?

The cost of a no-exam policy varies based on the individual. However, when you hold all other factors constant, a no-exam policy will be more expensive than a traditional life insurance policy. An insurance provider assumes more risk with a no-exam policy because it cannot easily estimate when or if it will have to make a death benefit payout.

Your coverage limits and chosen policy type significantly affect how much you will pay for the policy. For instance, if you opt for a policy with a 10-year term and a $30,000 death benefit, it will cost less than a policy with a 10-year term and a $50,000 death benefit.

Insurers look at other factors when determining your premiums, including age, gender, lifestyle, hobbies, health status and driving history. If you are a smoker or have high-risk hobbies like rock climbing, you will generally pay more for a policy. In some instances, insurers charge more to insure men than women, because women statistically live longer on average, but pricing does not change based on gender in all states. Since every insurer evaluates applications differently, we recommend that you shop around and compare rates for the best deal.

Is No-Exam Life Insurance Worth It?

The major advantages of no-medical-exam life insurance policies include the ability to get coverage without disclosing details about your health or to get coverage quickly, sometimes instantly. Life insurance without an exam can be worth it if you suffer from any chronic medical conditions, have been denied traditional life insurance in the past or need coverage fast. However, it’s important to note that lying on your application could be considered insurance fraud, and insurers can still access your health history during the underwriting process without a physical exam.
Due to the inherent risk of offering life insurance without insight into your health, insurers tend to provide only limited coverage at expensive rates if you choose a no-exam policy. If you are in good health, need a sizable death benefit or are shopping on a tight budget, no-exam life insurance may not be worth looking into.

Our Conclusion on The Best No-Exam Life Insurance Companies

While traditional life insurance can offer lower rates and more comprehensive coverage, no-exam life insurance has a swift application process that provides coverage without requiring you to undergo a physical health exam. However, each policy has its benefits and limitations. Your coverage needs, timeline and budget can help determine the right policy for you. We gathered our top six providers offering no-exam life insurance coverage in this article. We recommend you gather quotes from at least three providers before deciding on a policy.

Our goal at the Guides Home Team is to provide you with comprehensive, unbiased recommendations you can trust. To rate and rank life insurance companies, we created a thorough methodology and analyzed each company by combing through online policy information, speaking to agents via phone, reading customer reviews for insight into the typical customer experience, and reviewing third-party financial reliability scores. After collecting this data, we scored each company in the following categories: coverage, riders, availability and ease of use, and brand trust. To learn more, read our full life insurance methodology for reviewing and scoring providers.



DHORT Reviews by Customers

Customer reviews help evaluate how the exam material performs in the real test. All the reviews, reputation reports, success stories and ripoff reports are provided here.

100% Valid and Up to Date DHORT Exam Questions

In collaboration with the world's leader in certification exam dumps, real exam questions and practice tests, we offer real exam questions for thousands of certification exams as free PDFs, along with up-to-date VCE exam simulator software.